Below is a lengthy presentation of the framework — if you want to jump straight in, go directly to the complete RAIIF framework or the Simplified Assessment Form.

As artificial intelligence advances, it offers great potential to profoundly transform our society. Venture capitalists and tech investors can direct investments toward AI systems that are safe and sustainable. We have therefore created the Responsible AI Investment Framework (RAIIF) as a resource to help tech investors and venture capitalists at all stages map and evaluate ethical and practical AI risks.

*Disclaimer: the model takes a practical approach centred on governance, leadership and the team. In-depth technical due diligence is beyond the scope of this framework.

So why, you may ask?

- Regulators, researchers and policymakers are already laying the groundwork for norms and theoretical frameworks in AI ethics.

- Builders, investors and consumers are moving even faster. The companies we invest in today are thought leaders actively influencing the impact of AI. And their success hinges on engaging their users, communities, investors, employees and customers around this topic.

- As investors and sponsors of the development of AI tech, we bear responsibility for the long-term strategic choices that will profoundly influence how AI is rolled out and its societal repercussions in the foreseeable future.

We believe the EU AI Act is most likely to become the global regulatory standard of reference. Whether you like it or not, alignment will be the way to go, so we might as well make it as practical as possible. We embrace ethical alignment as a way for businesses to open a dialogue with customers, to achieve safer and more sustainable long-term outcomes, and to avoid AI posing substantial risks to humanity.

Here is an open access link to the complete models and framework. The framework allows for a simplified or extended assessment depending on the stage/size/maturity of the company you are evaluating.

Before diving into the framework, I will first give some context and highlight the trends indicating the importance of assessing AI risk, and deploying AI responsibly as technology quickly advances.

Converging Trends

Highlighting the Importance of Investing Responsibly in AI

Deploying AI necessarily raises fundamental ethical (bias, discrimination, security, data protection, mass manipulation, etc.) and economic (AI systems deployed in critical infrastructure, displacement of highly skilled workers, etc.) questions and uncertainties that need to be addressed.

Three significant trends (speed of adoption, multidisciplinary AI research and geopolitical power struggles) highlight the importance of deploying AI responsibly as the technology continues to advance quickly.

Speed of Adoption

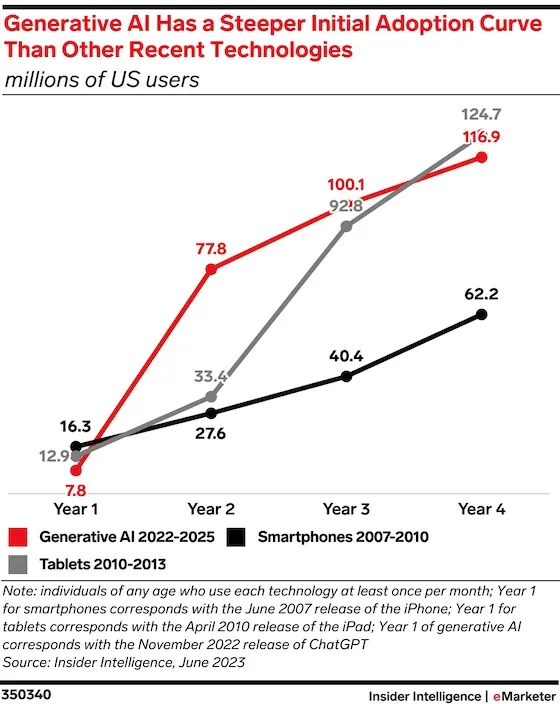

AI is becoming part of our everyday lives, and people are turning to AI tools that change the way we learn, work, communicate and socialise. The speed of adoption (read: disruption) is exponential, unlike anything we have seen before, even with the iPhone or tablets.

Implications of AI Ethics

The boom in multidisciplinary research related to AI ethics is a testament to the underlying complexity and vastness of the field. To name just a few influential research bodies that I’d recommend following: Stanford’s HAI, the Center for AI Safety and the Montreal AI Ethics Institute.

The Geopolitical Struggle

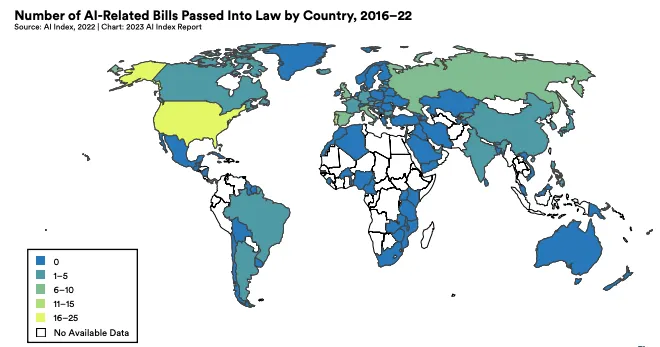

A Stanford analysis of the parliamentary records on AI in 81 countries shows that mentions of AI in global legislative proceedings have increased nearly 6.5 times since 2016. Legislating is not only a means of locally controlling a technology with disruptive and partly unpredictable societal effects, but also a matter of global economic and political influence.

Structure of the Framework

When talking about Responsible AI from the perspective of an investor, we refer to the ethical implications to consider when investing in, and exercising active shareholder ownership of, companies that develop and deploy artificial intelligence.

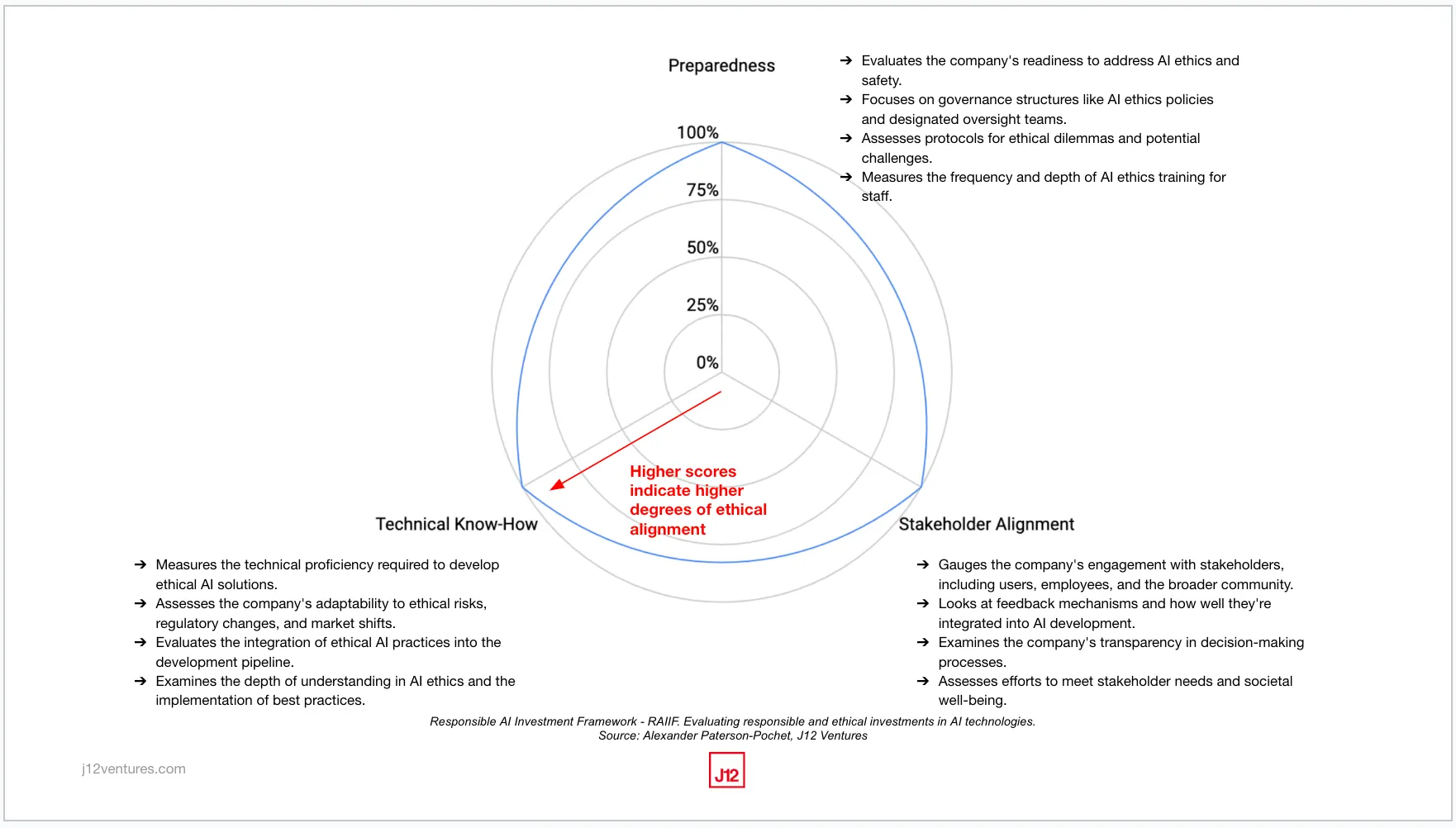

The table above summarises the underlying concepts of the framework and the interplay between them. Through a set of questions, the target company is first categorised by risk (Step 1), which determines the level of materiality or potential harm. The risk category sets the minimum level of perceived Preparedness, Stakeholder Alignment and Technical Know-How that a company needs to display in order to be deemed investable. A set of questions guides the assessor through the company’s alignment with these three pillars (Step 2). Based on the assessor’s responses, an overall score is attributed (Step 3) that, in combination with the company’s risk category, yields a recommendation on whether the company is investable from a responsible AI perspective. The assessor may select different starting parameters to adjust the depth of questioning or due diligence.

Risk Classification

The risk classification is modelled on the current proposal for the EU AI Act, which has not yet been fully adopted (expected to enter into force in early 2025).

The first step involves classifying the target investment based on potential risks related to AI implementation and usage as follows.

Unacceptable Risk

These are AI implementations where the risks are deemed too high to be effectively mitigated or managed. This could involve ethically questionable applications, regulated industries where the magnitude of potential harm is too great, potential for significant harm to users, or breach of fundamental rights. If you are considering an investment in a company likely to present unacceptable risks, be aware that you might be sponsoring potentially very harmful technologies and that the very existence of the company might be subject to a crackdown by regulators. If the company sits at the frontier of disruptive innovation and unacceptable risk, its leadership needs to have an exceptionally strong understanding of the ethical, political and regulatory implications at play, and to have developed entirely novel and verified ways of effectively and safely mitigating any potential harms.

High Risk

AI applications that carry potential risks which can be controlled with proper management, mitigation and oversight. The applications in this category might require regular monitoring and stringent evaluation. The management teams of such companies should exhibit a heightened vigilance towards ethical challenges, possess a proactive risk mitigation mindset, and demonstrate adaptability in navigating complex AI landscapes. Their competencies would ideally include a combination of technical depth, regulatory awareness, and crisis management skills, ensuring they can swiftly address potential pitfalls and ambiguities associated with cutting-edge AI deployments.

Limited Risk

AI technologies that pose minimal risks to users and society. They often have a narrowly defined scope with clear, transparent, and easily understandable functions. The leadership of such companies should demonstrate a proactive commitment to transparency and stakeholder engagement, and have a track record of prioritising safety and inclusivity in AI deployments.

Once the risk category is determined, the target investment is evaluated on the following pillars:

Preparedness

Assesses how ready the company is to address potential AI risks and challenges. Companies that demonstrate high levels of preparedness can gain a competitive edge by showcasing their responsibility and forward-thinking. This can lead to increased trust from investors, customers, and regulators, potentially resulting in faster approvals, greater market share, and higher valuations.

Stakeholder Alignment

Evaluates if the company’s AI goals and implementations align with the interests of stakeholders (users, investors, employees, etc). Aligning with stakeholders can drive customer loyalty, foster strong partnerships, and attract responsible investors. Companies that prioritise this alignment can benefit from positive word-of-mouth, reduced churn, and increased access to capital from ethically-minded investors.

Technical Know-How

Examines the company’s depth of understanding and capability to integrate responsible AI practices into its development pipeline. This involves not just the technical ability to embed ethical AI practices but also the discernment to ensure the resultant technologies uphold ethical standards, are free from biases, and prioritise safety. Companies with strong ethical AI expertise are better placed to adapt and innovate swiftly in the face of emerging ethical challenges, evolving regulations, and market shifts, which supports enduring progress and resilience.

Reading the Outcomes

The following summarises how an assessor may interpret the results from the questionnaire.

You can also try the framework out yourself or fill in the Simplified Assessment Form.

Risk Assessment

- Unacceptable Risk: These investments are generally avoided, but if there’s a compelling reason to consider them, they’ll need to demonstrate outstanding alignment with responsible AI practices in the pillar evaluation.

- High Risk: These investments have inherent risks, and they’ll need to score highly in the pillar evaluation to be deemed investable.

- Limited Risk: These are inherently safer investments, but they still need to demonstrate a good alignment with responsible AI practices in the pillar evaluation.

Pillar Evaluation Score

For each pillar, each question is given a score from 0 to 2 points, and these scores are then summed to give an overall score.

Step 1: A score is assigned for each question based on its answer:

- Yes/Positive response (indicates low risk or adherence to best practices) = 2 points

- Partial/Neutral response (indicates areas where the company might be in a grey zone or has some measures in place but not comprehensive ones) = 1 point

- No/Negative response (highlights high-risk areas or potential red flags) = 0 points

Step 2: The framework sums up the scores for each pillar. With (up to) 20 questions per pillar, the maximum score for a pillar is 40 points.

Step 3: Summing up the score obtained in all three pillars, the maximum overall score is 120 points. The score is then normalised on a percentage basis for easier comparability.
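The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the framework's own implementation: the answer labels (`"yes"`, `"partial"`, `"no"`), the function names and the pillar keys are assumptions made for the example.

```python
from typing import Dict, List

# Points per answer, as described in Step 1.
# The string labels are hypothetical names chosen for this sketch.
POINTS = {"yes": 2, "partial": 1, "no": 0}
MAX_POINTS_PER_QUESTION = 2


def pillar_score(answers: List[str]) -> int:
    """Step 2: sum the points of all answered questions in one pillar."""
    return sum(POINTS[answer] for answer in answers)


def overall_percentage(answers_by_pillar: Dict[str, List[str]]) -> float:
    """Step 3: sum all pillars and normalise to a percentage."""
    earned = sum(pillar_score(a) for a in answers_by_pillar.values())
    answered = sum(len(a) for a in answers_by_pillar.values())
    if answered == 0:
        raise ValueError("at least one question must be answered")
    return 100.0 * earned / (answered * MAX_POINTS_PER_QUESTION)


# Hypothetical company with 20 answered questions per pillar.
example = {
    "preparedness": ["yes"] * 16 + ["no"] * 4,                        # 32 of 40
    "stakeholder_alignment": ["yes"] * 10 + ["partial"] * 2 + ["no"] * 8,  # 22 of 40
    "technical_know_how": ["yes"] * 19 + ["partial"] * 1,             # 39 of 40
}
print(overall_percentage(example))  # 93 of 120 points
```

With 20 questions in each of the three pillars, the denominator is the 120-point maximum mentioned in Step 3, and the hypothetical company above earns 93 of those points.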

Score Interpretation for Investors

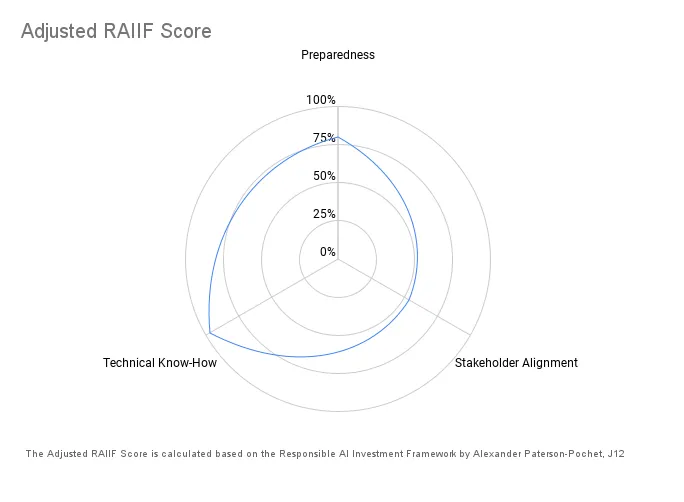

Based on parameters selected by the assessor (the assessor may decide to carry out a more or less expansive due-diligence process based on the company’s size, maturity, risk category or informational gaps), the framework yields a relative and an absolute RAIIF score.

- The Global RAIIF score takes into account the full universe of questions in the framework and therefore gives an absolute measure of the company’s Responsible AI alignment compared to all other companies (small or large) involved in developing or deploying AI.

- The Adjusted RAIIF score on the other hand takes into account only the subset of questions selected and answered by the assessor.
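The difference between the two scores can be illustrated with a short Python sketch. It rests on one assumption that the text does not spell out: that questions the assessor did not select count as zero points in the Global score while still appearing in its denominator. The function name, the answer labels and the question ids are likewise hypothetical.

```python
from typing import Dict, Optional

# Points per answer; the labels are hypothetical names for this sketch.
POINTS = {"yes": 2, "partial": 1, "no": 0}


def raiif_scores(answers: Dict[str, Optional[str]]) -> Dict[str, float]:
    """Return the Adjusted and Global scores as percentages.

    `answers` maps every question id in the framework to an answer label,
    or to None when the assessor did not select that question.
    ASSUMPTION: unselected questions earn 0 points in the Global score.
    """
    answered = [a for a in answers.values() if a is not None]
    if not answered:
        raise ValueError("at least one question must be answered")
    earned = sum(POINTS[a] for a in answered)
    return {
        # Only the selected subset of questions forms the denominator.
        "adjusted": 100.0 * earned / (2 * len(answered)),
        # The full universe of questions forms the denominator.
        "global": 100.0 * earned / (2 * len(answers)),
    }


# Hypothetical simplified assessment: 30 of 60 questions selected.
answers: Dict[str, Optional[str]] = {f"q{i}": None for i in range(60)}
for i in range(24):
    answers[f"q{i}"] = "yes"
for i in range(24, 30):
    answers[f"q{i}"] = "partial"

scores = raiif_scores(answers)
print(scores["adjusted"], scores["global"])
```

Under this assumption, a simplified assessment can produce a high Adjusted score and a much lower Global score for the same company, which is why the interpretation bands are applied to the Adjusted score.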

Below are the recommended interpretations based on the Adjusted RAIIF score that was obtained.

Limited Risk Companies

- 67%-100%: Excellent alignment with responsible AI practices.

- 54%-66%: Good alignment, suitable for investment with some areas for improvement.

- 42%-53%: Moderate alignment, further due diligence required before investment.

- 0%-41%: Caution advised, significant gaps in responsible AI practices.

High Risk Companies

- 83%-100%: Excellent alignment with responsible AI practices.

- 71%-82%: Good alignment, suitable for investment with some areas for improvement.

- 58%-70%: Moderate alignment, further due diligence required before investment.

- 0%-57%: Caution advised, significant gaps in responsible AI practices.

Unacceptable Risk Companies

- 92%-100%: Outstanding alignment with responsible AI practices; the risk classification may warrant reconsideration.

- 75%-91%: Good alignment, but still caution advised given their initial classification.

- 0%-74%: Not recommended for investment.
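The three tables above amount to a lookup from risk category and Adjusted RAIIF score to a recommendation. The sketch below encodes them in Python. The function name and the category keys are assumptions made for illustration, with `"limited"` standing for the lowest-risk table. The tables list whole percentages only, so the sketch also assumes that a fractional score such as 66.5% falls into the lower of the two neighbouring bands.

```python
from typing import Dict, List, Tuple

# (lower bound in percent, interpretation), ordered from highest band to lowest.
BANDS: Dict[str, List[Tuple[int, str]]] = {
    "limited": [
        (67, "Excellent alignment with responsible AI practices"),
        (54, "Good alignment, suitable for investment with some areas for improvement"),
        (42, "Moderate alignment, further due diligence required before investment"),
        (0, "Caution advised, significant gaps in responsible AI practices"),
    ],
    "high": [
        (83, "Excellent alignment with responsible AI practices"),
        (71, "Good alignment, suitable for investment with some areas for improvement"),
        (58, "Moderate alignment, further due diligence required before investment"),
        (0, "Caution advised, significant gaps in responsible AI practices"),
    ],
    "unacceptable": [
        (92, "Outstanding alignment, risk classification may be reconsidered"),
        (75, "Good alignment, but still caution advised"),
        (0, "Not recommended for investment"),
    ],
}


def interpret(risk_category: str, adjusted_score: float) -> str:
    """Map an Adjusted RAIIF score (0 to 100) to the band for its risk category."""
    if not 0 <= adjusted_score <= 100:
        raise ValueError("score must be between 0 and 100")
    for lower_bound, interpretation in BANDS[risk_category]:
        if adjusted_score >= lower_bound:
            return interpretation
    raise AssertionError("unreachable: the lowest band starts at 0")


print(interpret("high", 79))
```

The same score leads to different recommendations depending on the category: 79% is "Good alignment" for a high-risk company but would be "Excellent alignment" in the lowest-risk table.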

Evaluation Example: DeepMind

Let’s take Google DeepMind, an AI company known for its pioneering work on AI for games, health, etc., as an example.

*Disclaimer: we asked GPT-4 to run DeepMind through our extended framework and then adjusted responses using some publicly available data. This example is therefore purely illustrative and I’m sure the fine people at DeepMind would have a lot more to add.

Risk Classification

High Risk

DeepMind works on numerous AI projects that are generally beneficial, yet the potential implications of its technologies, especially in healthcare, make it a high-risk entity.

Evaluation Based on the Pillars

Preparedness (80%)

- DeepMind has a history of collaboration with experts and institutions, indicating a level of readiness to address AI challenges.

- They have collaborated with the NHS, showing a willingness to work within established frameworks.

Stakeholder Alignment (54%)

- DeepMind Health’s use of NHS patient data has raised concerns in the past. However, they have made efforts to be transparent and address these concerns.

- They regularly publish research, ensuring a level of transparency with the broader scientific community.

Technical Know-How (97%)

- Being at the forefront of AI research, DeepMind has proven technical expertise in AI.

- They have consistently showcased their AI’s capabilities, like the success of AlphaGo.

Overall Adjusted RAIIF Score: 79%

Investment Recommendation

DeepMind would be classified as high risk due to the potential implications of its technologies and would require extended due diligence. It scores favourably on the pillars of preparedness, stakeholder alignment, and technical know-how.

DeepMind shows good alignment with the framework and would therefore be suitable for investment with some areas for improvement.